What is Data Engineering? Different concepts.

Define Data Engineering, different data roles and responsibilities.

Data Engineering Free Course #3

In the last 2 episodes, we understood the value of Data in business. let us understand how this value is unlocked.

We know every business is trying to make sense of its data. Now the data that is collected is never in a clean shape. Hence every business needs to have a basic Data Platform and architecture in place to unlock value from Data. In absence of a matured Data Architecture, companies can never move towards unlocking value, via Machine learning or Data Science.

Recap: In week 2 we explored the impact of data on various industries through real-life case studies. We also discussed what data professionals can learn from these case studies to better prepare for business rounds in interviews.

Data Engineers are responsible to provide this basic foundation of Data Infrastructure. They collect the requirements to understand the basic needs of a Data Platform. Depending on the maturity of the data in the company, they take the decision about the infrastructure to use, and tools needed to deliver business rules and build the basic pipeline to process the data.

Let us look at it in more detail:

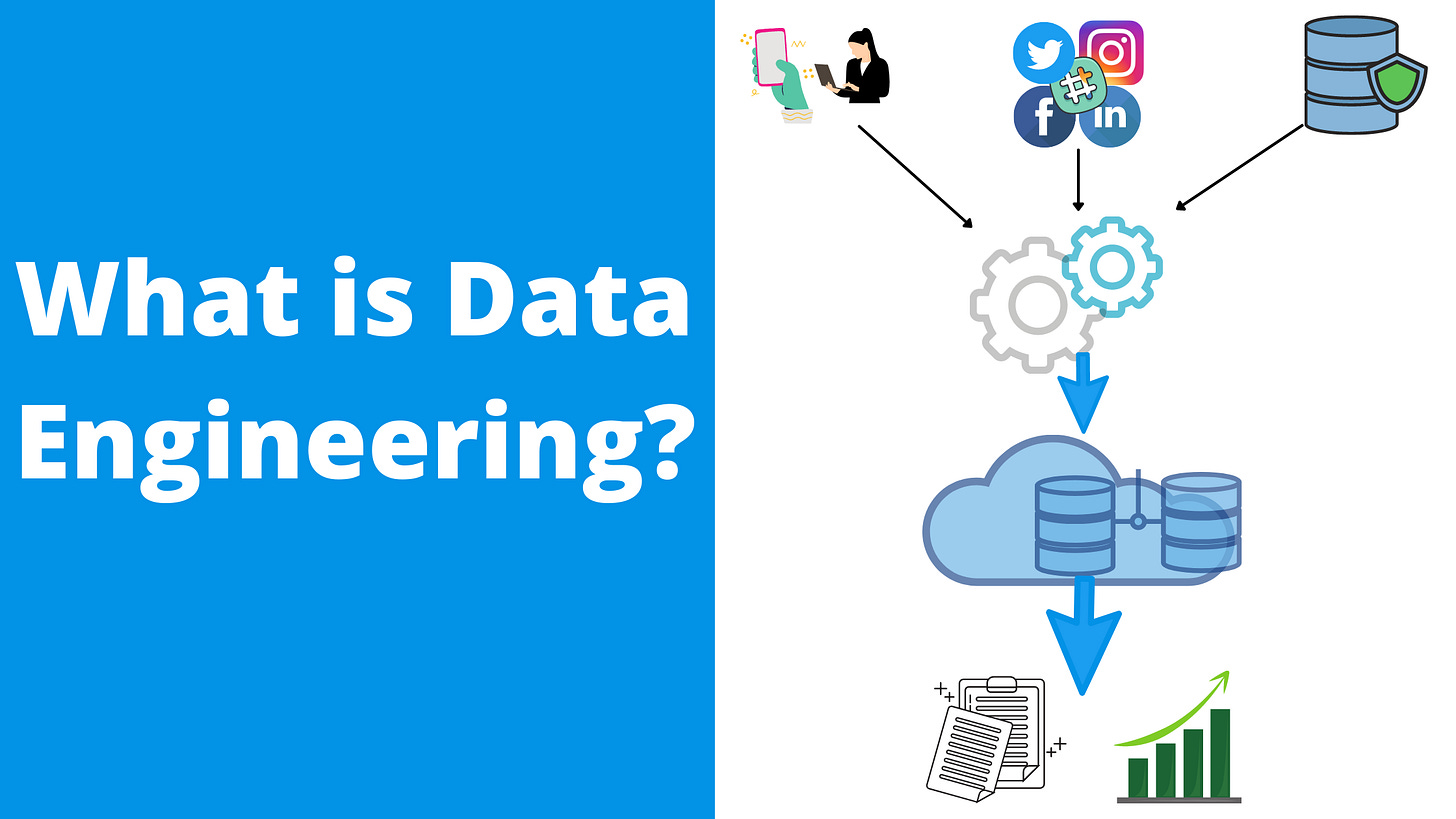

Data engineering is the process of collecting, storing, and processing data so that it can be easily analyzed and used by other software applications or people. It involves building and maintaining the infrastructure and tools needed to move data from various sources into a centralized location, transform the data into a format that can be used by other systems, and then make that data available for analysis and reporting.

For example, let's say you run an e-commerce website that sells clothing. You might have data about the products you sell, the customers who buy them, and the orders that are placed. Data engineering would involve building and maintaining the infrastructure and tools needed to collect that data from various sources, such as your website and social media, store it in a database, transform it into a format that can be easily analyzed, and then make it available for use by other systems or people who need to analyze it, such as your marketing team or data analysts.

What are different Data Roles then?

It's too much of an ask to explain, so...

let's ask ChatGpt to explain what Data Engineering is and what the role of different Data Roles are.

Me: Hi, can you explain to me what Data Management is?

ChatGPT: Sure! Data Management is like running a restaurant, where data is like the ingredients used to create the dishes. It's the process of organizing, storing, protecting, and maintaining the data used by an organization to ensure that it's accurate, complete, and available when it's needed.

Me: Ah, I see. So what role does a Data Engineer play in Data Management?

ChatGPT: A Data Engineer is like a sous chef in a restaurant. They are responsible for setting up the data infrastructure, developing ETL pipelines, and building the data platform that the data scientists and analysts use. They make sure that the data is stored, processed, and managed correctly.

Me: I see. And what about ETL developers then? If Data Engineers are doing everything, what would they do?

ChatGPT: ETL developers are like the line cooks in a restaurant. They take the prepared ingredients and combine them to create the final dishes. They are responsible for the actual movement and transformation of data from one system to another. They make sure that the data is properly transformed and formatted so that it can be used by data analysts and scientists.

Me: Interesting. What about Data Analysts?

ChatGPT: Data Analysts are like the servers in a restaurant. They are responsible for analyzing the data to extract insights and make recommendations based on those insights. They use data visualization tools to communicate the findings to the stakeholders.

Me: I see. And finally, what role does a Data Scientist play in Data Management?

ChatGPT: A Data Scientist is like the head chef in a restaurant. They are responsible for developing predictive models, running experiments, and creating new products or features based on the insights they extract from the data. They use statistical and machine learning techniques to analyze the data and make predictions about future trends.

I guess you now have a fairer understanding of the division of the work of different data roles.

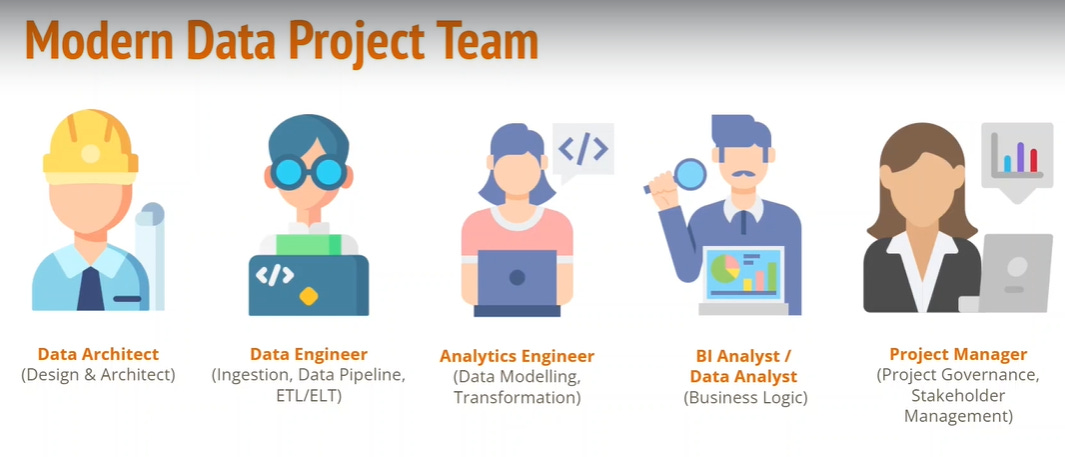

Let's review the below diagram for a little bit more perspective on different data roles.

In this image, we have 4 different roles in Data Architect, Data Engineer, Analytics Engineer, and BI Analyst or Bi developer, etc. However, based on the Data Maturity of the companies we can have many of these roles clubbed into 1/2 roles.

For Ex: Companies that have just started to build their Data infrastructure will have 1/2 persons doing almost all the 4 roles.

As the company grows slowly the Data Architects will be differentiated from Data Engineers, as Data Architects focus more on designing and understanding the complete data landscape of the company Data Engineers will mainly focus on building the infrastructure, the pipelines, and the custom code needed for data processing.

As the companies grow, SQL focussed and programming focussed data engineers move to Data Modelling and building/scaling pipelines respectively.

Now that we understand different data roles, let us explore the Data Engineering Lifecycle.

Data Lifecycle:

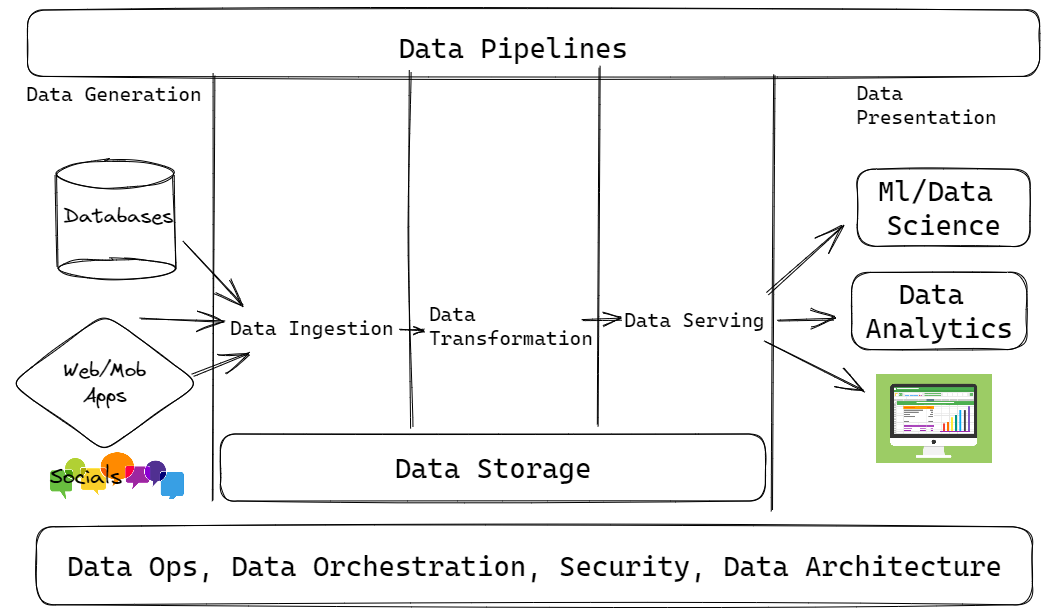

The Data lifecycle refers to the complete steps of different activities data goes through in an organization. It encompasses all the steps, starting from collecting or generating data to processing it, cleaning, transforming it to make it useful, deriving insights, and ultimately archiving the data.

Here are the key components:

Data Generation: This phase includes different applications that generate for a business. This may include different software, like CRM/Web App/Application Database, mobile software, etc. This also includes different sensor-generated data in IOT, social media data generated through user comments/reviews, etc. Basically, any system that generates data related to the business is part of Data Generation.

Data Ingestion: This refers to the stage of reading data from different sources and writing it into any kind of temporary storage system. The storage can either be a file system or an object storage or even a database system.

Data Transformation: Often the data generated is not in a clean state, this might have duplicate or outdated data. Some structures of the data might not be extremely suited for further processing or Data analysis. Hence in this phase data is first cleansed, and then several business rules are applied to the data. Before being loaded the data is modeled to mimic the business and its related components as an entity. Several transformations are applied, like adding/removing columns, standardizing the data, and aggregating or denormalizing for reporting purposes.

Data Serving: The clean and curated data is now loaded into systems or tables for downstream systems to read. Data Analysts write different queries, ML engineers run different experiments, and several different reports are created from this data. In order for these users to have access to such clean data, the data Serving layer is used either through direct access or materialized views.

Data Presentation: Finally the reporting tools and different codes from Data Science/Analyst teams visualize the data to make sense of it, and identify patterns. This is termed as Data Presentation layer.

Data Pipeline is the process that combines all of the above 5 stages. A Data Pipeline is often used as an abstract term to refer to the orchestration of all 5 stages as one coherent pipeline, that moves data from one stage to the next.

The Data Pipelines are often represented as a Directed Acyclic Graph (DAG). A DAG is a Directed Acyclic Graph — a conceptual representation of a series of activities, or, in other words, a mathematical abstraction of a data pipeline.

Read more about it here, in this blog from Astronomer.

Critical to all these Data Stages are some inherent properties and functions. These are not direct stages of a data lifecycle. However, they enable different aspects of a Data Lifecycle. These are Data Ops, Orchestration, Data Security, and Data Architecture. For the sake of simplification, let's not worry about these rights now. We will discuss them in a later section.

We will continue to delve deeper into different types of data and different concepts related to Data Engineering next week.

References:

If you have not yet checked the last 2 episodes, read them here:

Whenever you’re ready, there are 3 ways I can help you in your Career Growth:

Let me help you mentor with your career journey here.

Grow Your LinkedIn brand and get diverse here.

Take charge of your career growth here.

Book some time with me: https://topmate.io/saikatdutta